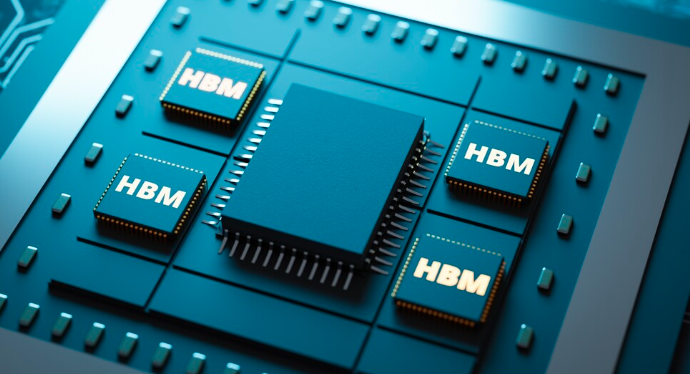

As demand for AI chips intensifies, memory makers are aggressively advancing their HBM strategies. SK hynix, which holds a dominant position in HBM3e, has now revealed further details of its HBM4e plans. According to reports from Wccftech and ET News, SK hynix aims to set itself apart by developing an HBM variant that combines multiple functions, including computing, caching, and network memory.

Although the concept is still at an early stage, SK hynix has begun acquiring semiconductor design IP to support these ambitions, according to the same reports.

According to ET News, SK hynix aims to lay the groundwork for this multifunctional HBM with its upcoming HBM4 generation. The company reportedly plans to build a memory controller into the HBM package, adding new computing capabilities with its seventh-generation HBM4e memory.

Under SK hynix's approach, memory and logic would be combined into a single integrated package. According to the reports, this integration would both speed up data transfer, by substantially reducing the physical gaps between components, and improve power efficiency.

In April, SK hynix announced a collaboration with TSMC to produce next-generation HBM and to improve the integration of logic and HBM through advanced packaging technology. As part of this initiative, the company plans to press ahead with developing HBM4, which is slated for mass production by 2026.

With more details on HBM4 now emerging, SK hynix appears poised to extend its leading market position in HBM3 by taking on the logic semiconductor side of HBM design, Wccftech noted.

TrendForce's earlier analysis indicates that, as of early 2024, the HBM market is concentrated mainly on HBM3. NVIDIA's upcoming B100 and H200 will adopt the more advanced HBM3e, the next step in HBM technology. SK hynix is currently the main supplier of HBM3 for NVIDIA's H100, and supply has fallen short of surging demand from the AI market.

In late May, SK hynix disclosed HBM3e yield details for the first time. According to a report from the Financial Times, the company has cut the time needed to mass-produce HBM3e chips by 50% and is approaching its target yield of 80%.

This article was originally published by TrendForce.

FAQs

What is HBM4e?

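HBM4e is the seventh generation of High Bandwidth Memory (HBM). According to reports, SK hynix plans to build a memory controller into its HBM4e package to add computing capabilities, and aims eventually to offer HBM that combines computing, caching, and network-memory functions.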

How does HBM4e differ from HBM3?

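As SK hynix envisions it, HBM4e would go beyond conventional memory by integrating logic such as a memory controller into the package. According to the reports, this tighter integration is intended to speed up data transfer and improve power efficiency, while adding functions such as computing and caching.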

What are the key benefits of HBM4e?

Based on the reports, the expected benefits are faster data transfer, thanks to closer integration of memory and logic, better power efficiency, and built-in functions such as computing and caching.

When will HBM4e be available?

The reports give no date for HBM4e. HBM4, the preceding generation that SK hynix is developing in collaboration with TSMC, is slated for mass production by 2026.

How will HBM4e impact AI technology?

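If SK hynix's plans materialize, HBM4e's expected faster data transfer and improved power efficiency could help AI accelerators handle larger and more demanding workloads. AI chip demand is already the main driver of the HBM market and of the current supply shortfall.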